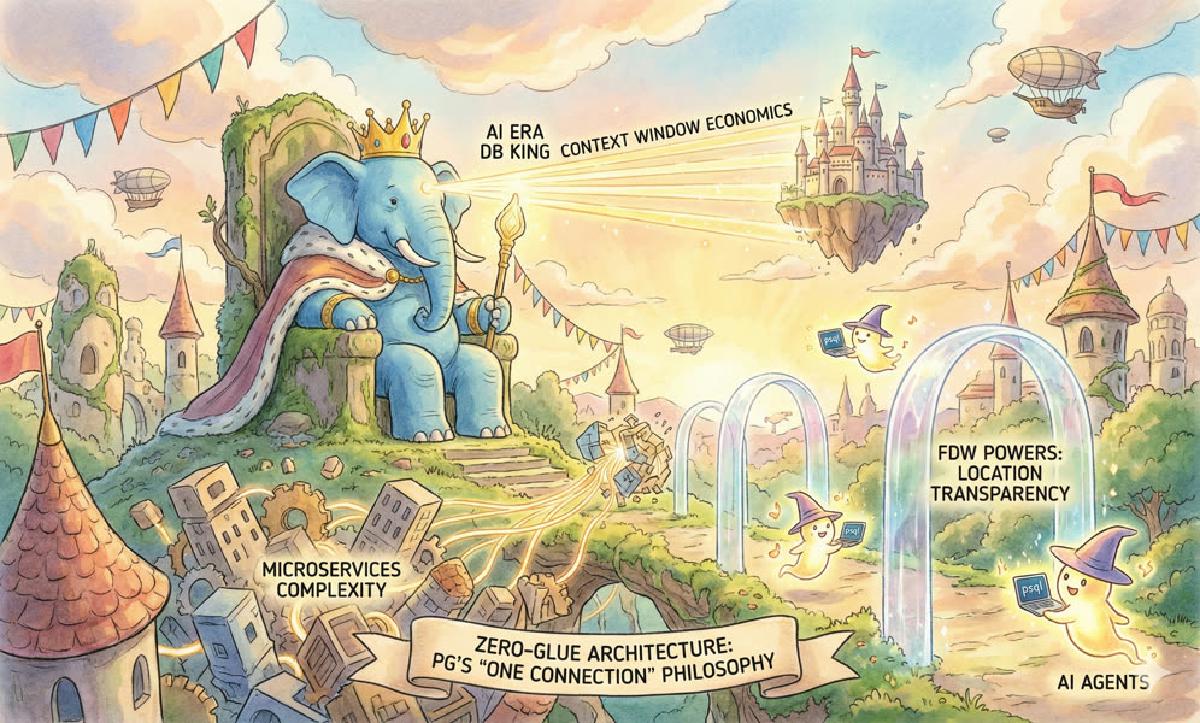

In the Agent era, the underlying logic of software architecture has changed.

Over the past decade, we’ve built microservices and “polyglot persistence” to accommodate the collaboration boundaries of human teams, fragmenting our systems into scattered pieces. But in the new paradigm of rising AI Agents, this fragmented architecture is becoming an expensive form of “technical debt.”

The scarcest resource is no longer storage or compute—it’s the LLM’s attention bandwidth (Context Window).

The complexity and fragmentation brought by microservices are now levying a massive “cognitive tax” on AI Agents. And the antidote to this poison is PostgreSQL. Let’s discuss why PG will become the “database king” of the AI era.

— A lightning talk by Feng at the “8th China PostgreSQL Ecosystem Conference”

Polyglot Persistence: A Nightmare of Cognitive Fragmentation#

In traditional “best practices,” we’re accustomed to scattering data everywhere: MySQL for transactions, Redis for caching, MongoDB for documents, Elasticsearch for search, Milvus for vectors.

This design philosophy is called “Polyglot Persistence”—using multiple data storage technologies within a single system to meet different storage needs.

It sounds great in theory—“use the right tool for the right job.” However, it creates a highly adversarial environment for AI Agents (and human engineers alike).

Agents primarily operate within the boundaries of their context window. This finite buffer—whether 8k, 128k, or 1M tokens—is everything the Agent has: short-term memory, working drafts, interface definitions, all crammed in here.

Imagine a typical cross-domain query: “Find users who purchased product X, visited page Y, and have negative sentiment in support tickets.” Under polyglot persistence, the Agent must endure a “war of attrition caused by data silos”:

- Loading Drivers and Schemas (Burning Cash): The Agent must stuff MongoDB syntax, ES DSL, Neo4j Cypher, and all endpoint schema definitions into its context. Every token spent explaining APIs is a resource stolen from core reasoning capacity.

- Writing Glue Code (High Risk): The Agent is forced to act as a “distributed scheduler,” writing Python to connect three different systems while handling network timeouts, authentication failures, and version mismatches.

- Application-Layer Joins (Inefficient): Data shuttles between systems as the Agent is forced to do data cleaning and joins in limited memory.

This “ping-pong” architecture isn’t just inefficient—it causes context overload. Stuffing all tool definitions into a single mega-Agent rapidly exhausts the budget. When irrelevant schemas and intermediate data fill the window, the LLM’s reasoning ability hits a ceiling, directly causing “hallucinations” to spike.

Context economics favors lean, focused tools and abhors sprawling heterogeneous systems.

PostgreSQL: Zero-Glue Architecture#

What’s the antidote? Unification.

We need a “data operating system” that can solve all problems in one connection, one dialect. Through its unparalleled extensibility, PostgreSQL has long transcended the realm of relational databases, evolving into a versatile data platform.

PG’s philosophy is simple: push complexity down into the database kernel, keeping the Agent lightweight.

Full-Stack Data Fusion: The Trinity#

PG’s extension ecosystem effectively absorbs the capabilities of specialized systems.

In the PG ecosystem, you don’t need to introduce a new database component for every new feature:

| Domain | Extension | Replaces |

|---|---|---|

| Vector Search | pgvector, pgvectorscale, vchord | Milvus, Pinecone, Weaviate |

| Full-Text Search | pg_search, pgroonga, zhparser, vchord_bm25 | Elasticsearch |

| Time Series | TimescaleDB | InfluxDB, TDengine |

| Geospatial | PostGIS | Specialized GIS databases |

| Document Store | jsonb + GIN indexes | MongoDB |

| Message Queue | pgq, pgmq | Kafka |

| Cache | spat, pgmemcached, redis_fdw, unlogged tables | Redis |

| Data Lakehouse | pg_duckdb, pg_mooncake, pg_parquet, pg_lake | ClickHouse, StarRocks |

For Agents, this means unification of the semantic universe. No more mental context-switching between SQL, DSL, and APIs.

More importantly, it democratizes hybrid search. You can perform precise filtering, full-text keyword search, and vector semantic search in a single SQL statement. This isn’t three systems stitched together—it’s an elegant pipeline of operators within one engine.

By converging data logic into a single ACID-compliant PostgreSQL engine, Agents don’t need to worry about eventual consistency in distributed transactions or cross-service data races. Transactions either commit or roll back. This determinism lets Agents treat the data layer as a reliable atomic primitive, not a distributed chaos system full of uncertainty.

FDW: Zero-Glue Architecture and Location Transparency#

What if you genuinely need to access external data? PG’s Foreign Data Wrappers (FDW) give Agents “God mode.”

Through FDW, Postgres can mount anything: DuckDB, MySQL, Redis, Kafka, CSV files on S3, even Stripe’s API or system monitoring metrics.

For Agents, this achieves perfect location transparency. The Agent just needs to execute SELECT * FROM sales_data. It doesn’t know—and doesn’t need to know—whether that data sits in S3 cold storage or a Snowflake warehouse. PG handles all the protocol translation and data movement.

This is the ultimate form of “zero-glue” architecture: Agents no longer need to write hundreds of lines of Python for ETL. They just send a high-density SQL statement declaring what they want.

Stored Procedures: Server-Side Toolbox#

PostgreSQL supports stored procedures in over twenty languages: Python, JavaScript, Rust, and more. This isn’t just functionality—it’s an architectural force multiplier:

- Token Savings: Complex business logic (RAG pipelines, data cleaning) is crystallized in database functions, no longer consuming precious prompt space.

- Security and Sandboxing: Agents call encapsulated functions (Tools), not raw SQL—permission boundaries are clear and controllable.

- Performance: Logic runs adjacent to data, eliminating network I/O overhead—usually the biggest performance bottleneck.

Interface Standardization: psql as IDE#

PG’s SQL dialect and the libpq wire protocol are covered knowledge in virtually all LLM training data. GPT-4 and Claude write PG-style SQL fluently.

By standardizing on Postgres, we provide Agents with a deterministic environment. You don’t even need MCP—you can throw away pymongo, redis-py, neo4j-driver, and all those drivers.

A single psql with a connection string from the command line is all you need to start working. The interface definition simplifies to one line: postgresql://user:password@hostname:5432/db

With just this one connection, an Agent can use pg_net / pg_curl to access the network, FDW to read and write anything, and SQL to orchestrate logic—even execute shell commands.

psql provides a superset of Bash functionality, making it naturally suited to become AI Agents’ next preferred execution environment.

Conclusion#

Context window economics determines the future of software architecture. In a world where intelligence is priced per token and constrained by prompt size, architectural simplicity is the ultimate optimization target.

Polyglot persistence was once a badge of technical sophistication. Now it’s a liability—a source of friction, latency, and token waste. It fractures the Agent’s reality, forcing it to squander cognitive resources on glue code rather than value creation.

PostgreSQL, equipped with pgvector, pg_net, postgres_fdw, and its extension ecosystem, provides a unified, programmable, “active” environment—a true Agent operating system. It allows Agents to reason (Vector), act (Net), and observe (FDW) through a single standard interface (SQL).

By consolidating data logic into a single ACID engine, the failure domain collapses. Transactions either commit or roll back. This determinism is priceless to Agents—the data layer becomes a reliable primitive, not a distributed chaos full of uncertainty.

The massive acquisitions by Databricks and Snowflake are the ultimate validation: The future of AI is Agentic, and the database for Agents is PostgreSQL.